The Era of Intelligent Cloud Computing & Cloud GPU Rental

Artificial Intelligence (AI), Machine Learning (ML) & cloud computing have become the driving forces of digital transformation across industries. As models grow larger and data more complex, organisations increasingly depend on the right GPU cloud provider to deliver reliable, scalable, and high-speed computing resources. The modern cloud GPU ecosystem enables developers, researchers, and enterprises to rent world-class GPUs instantly, achieving the power once limited to elite research labs.

In 2025, the competition among GPU cloud platforms has intensified, giving rise to a new generation of flexible and transparent GPU cloud rental solutions. These services allow users to train, fine-tune, and deploy advanced AI models with minimal setup time and zero infrastructure burden. Whether it is large-scale training of a transformer model or inference optimization for real-time applications, GPU cloud servers now make high-performance machine learning accessible to everyone.

What makes this guide different is its focus on both the technology and the practical considerations that matter in 2025. Beyond raw GPU power, the leading providers now combine developer-friendly features, orchestration tools, data security, and intelligent pricing structures. This guide explores the 11 best GPU cloud providers that stand out this year, helping you identify the perfect match for your AI workloads and business goals.

Why Cloud GPU Matters for Modern Machine Learning & Cloud Computing

Training and deploying state-of-the-art machine learning models requires vast computational strength. Training a single frontier-scale large language model, such as GPT-5 or Gemini 2, can consume hundreds of thousands to millions of GPU hours. This demand for performance has made the cloud GPU one of the most critical components in modern AI pipelines.

The value of using a cloud-based approach lies in the ability to scale computing resources instantly, pay only for what is used, and focus entirely on the model rather than on infrastructure management. For startups, researchers, and enterprises alike, this flexibility has redefined the economics of AI.

Key Advantages of Using GPU Cloud Providers

- Scalability and Flexibility: Cloud GPU platforms enable users to start small and expand instantly as workloads grow. Distributed training across multiple GPU nodes becomes straightforward, allowing faster results with minimal configuration.

- Cost Efficiency Through Rentals: The GPU cloud rental model allows pay-as-you-go billing that aligns perfectly with project timelines. Instead of making heavy capital investments in physical hardware, teams rent GPUs for as long as they need.

- Simplified Infrastructure Management: Providers handle hardware maintenance, networking, and updates. Users can devote their time entirely to model development, experimentation, and deployment.

- Optimised for AI and ML Frameworks: Modern GPU cloud providers offer ready-to-use environments for TensorFlow, PyTorch, JAX, and Hugging Face. These integrated setups reduce time-to-training significantly.

- Enterprise Security and Compliance: With certifications like ISO 27001 and SOC 2, cloud GPU servers provide secure environments suitable for production-scale and regulated workloads.

The GPU cloud provider has thus become a cornerstone of any serious ML operation, bridging the gap between computational demand and project feasibility.
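To make the rent-versus-buy trade-off concrete, here is a minimal sketch of a break-even calculation. Every figure in it (purchase price, rental rate, running cost) is a hypothetical placeholder, not a quote from any provider.

```python
def break_even_hours(purchase_cost: float, hourly_rental: float,
                     hourly_running_cost: float = 0.0) -> float:
    """Hours of use after which owning the GPU becomes cheaper than renting it.

    hourly_running_cost covers power, cooling and hosting for owned hardware.
    """
    saving_per_hour = hourly_rental - hourly_running_cost
    if saving_per_hour <= 0:
        # Renting never costs more per hour than running owned hardware.
        return float("inf")
    return purchase_cost / saving_per_hour


# Hypothetical numbers: a $30,000 card, $2.00/hr rental, $0.50/hr to run it yourself.
hours = break_even_hours(purchase_cost=30_000.0, hourly_rental=2.00,
                         hourly_running_cost=0.50)
print(f"Break-even after {hours:,.0f} GPU hours")  # Break-even after 20,000 GPU hours
```

If a project needs far fewer GPU hours than the break-even point, renting is usually the cheaper route under these assumptions.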

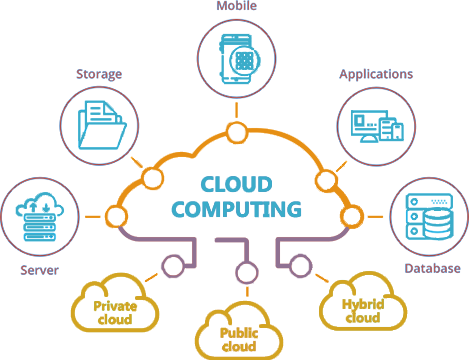

The Expanding Role of Cloud Computing in AI

Cloud computing and GPU acceleration together form the foundation of today’s AI revolution. Cloud computing provides on-demand resource allocation, while GPUs deliver the raw performance needed for parallelised computation. The combination enables teams to train, validate, and deploy large-scale models efficiently and sustainably.

How Cloud Computing Accelerates AI

- Distributed Model Training: Cloud computing allows workloads to be split across multiple GPU nodes. GPUs within a node are typically linked by NVLink, while the nodes themselves are connected by high-speed networks such as InfiniBand. This architecture significantly reduces training times for large models.

- Hybrid and Multi-Cloud Solutions: Many organisations now adopt hybrid setups, combining public and private infrastructure. This approach ensures compliance with data residency regulations while maintaining flexibility.

- Automated Machine Learning Operations: Built-in orchestration tools automate model deployment, monitoring, and scaling. Tasks such as data ingestion, version control, and checkpointing can be handled automatically.

- Sustainability and Efficiency Gains: GPU cloud servers are increasingly designed for energy-efficient performance. Providers are adopting greener data centre practices, making large-scale AI workloads more sustainable.

In 2025, cloud computing is not simply a technological advantage but a strategic necessity. It empowers organisations to experiment continuously, iterate quickly, and innovate without being limited by hardware constraints.
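The idea behind distributed (data-parallel) training can be sketched in a few lines of plain Python. This is a toy simulation, not a real multi-GPU setup: the "workers" run sequentially, and `all_reduce_mean` is a hypothetical stand-in for the collective operation a framework would perform over NVLink or InfiniBand.

```python
def local_gradient(w, shard):
    """Mean-squared-error gradient dL/dw for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for all-reduce: every worker ends up with the mean gradient."""
    return sum(values) / len(values)


def train(data, num_workers, steps=200, lr=0.01):
    # Each worker gets an equal-sized slice of the data.
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0
    for _ in range(steps):
        # On real hardware these gradients are computed in parallel, one per GPU.
        grads = [local_gradient(w, shard) for shard in shards]
        w -= lr * all_reduce_mean(grads)
    return w


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # samples from y = 3x
print(train(data, num_workers=4))  # converges towards 3.0
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, so four workers reach the same result as one while each processes only a quarter of the data per step.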

Essential Criteria for Choosing the Best GPU Cloud Provider for You

Selecting a GPU cloud provider requires careful evaluation of both technical and operational aspects. Each platform offers different advantages depending on use cases such as deep learning, LLM fine-tuning, inference APIs, or hybrid deployment. The following criteria will help you choose the right platform for your machine learning needs.

1. Availability of Modern GPUs

Hardware is the foundation of GPU performance. Choosing the right GPU type can drastically affect both speed and cost. The table below summarizes the top GPU models available in 2025.

| GPU Model | Architecture | Best Use Case | Key Advantage |
|---|---|---|---|
| NVIDIA H100 | Hopper | Large-scale transformer and multimodal model training | High-end training throughput with FP8 Transformer Engine support |
| NVIDIA A100 | Ampere | General-purpose deep learning and HPC | Excellent balance of performance and cost |
| NVIDIA L40S / Ada Series | Ada Lovelace | Diffusion, generative models, medium-scale workloads | Energy-efficient and versatile |
| AMD MI300X | CDNA 3 | LLMs and open-source ML frameworks | Strong performance at competitive pricing |
| RTX 4090 / 5090 | Ada Lovelace / Blackwell | Startups, academics, and small-scale experiments | Ideal for entry-level model prototyping |

2. Speed and Scalability

Setup time and elasticity are critical in ML workflows. Leading GPU cloud providers now deliver instance startup times of under a minute. Auto-scaling capabilities adjust compute power automatically as workloads change. Integration with Kubernetes and Docker simplifies orchestration across distributed systems.
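As an illustration of how auto-scaling decisions are made, the sketch below follows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric). The function name, the replica limits, and the use of GPU utilization as the metric are assumptions made for this example; the 0.1 tolerance mirrors the commonly documented default but should be treated as an assumption too.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 8,
                     tolerance: float = 0.1) -> int:
    """Scale the number of GPU workers so utilization moves towards the target."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(2, current_util=90, target_util=60))  # 3 (scale up)
print(desired_replicas(4, current_util=20, target_util=60))  # 2 (scale down)
print(desired_replicas(2, current_util=63, target_util=60))  # 2 (within tolerance)
```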

3. Developer and MLOps Ecosystem

A strong MLOps ecosystem ensures consistency and reliability. The best providers include ready environments for PyTorch and TensorFlow, as well as Jupyter notebooks and Docker containers. Many platforms also support CI/CD pipelines that automate data ingestion, model training, and deployment.

4. Transparent and Predictable Pricing

The most competitive platforms follow clear pricing models based on usage duration, typically billed per second or per minute. Some also offer marketplace-based GPU rentals that reduce cost through community or peer-to-peer sharing.
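Billing granularity matters more than it might seem for short or bursty jobs. The sketch below compares what the same job costs under per-second, per-minute, and per-hour billing. The $2.00 hourly rate and the 75.5-minute runtime are illustrative assumptions.

```python
import math


def billed_cost(runtime_seconds: float, hourly_rate: float,
                increment_seconds: int) -> float:
    """Round the runtime up to the billing increment, then charge pro rata."""
    units = math.ceil(runtime_seconds / increment_seconds)
    return units * increment_seconds * hourly_rate / 3600


runtime = 75.5 * 60  # a 75.5-minute fine-tuning job, in seconds
for label, increment in [("per-second", 1), ("per-minute", 60), ("per-hour", 3600)]:
    print(f"{label:>10}: ${billed_cost(runtime, 2.00, increment):.2f}")
# per-second: $2.52
# per-minute: $2.53
#   per-hour: $4.00
```

Under these assumptions, hourly rounding adds almost 60% to the bill, which is why fine-grained billing suits iterative experimentation.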

When comparing pricing, evaluate:

- The difference between on-demand, reserved, and spot instances.

- Whether pricing includes storage, bandwidth, and data transfer costs.

- If there are discounts for long-running workloads or multi-GPU clusters.
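The checklist above can be turned into a rough monthly estimate that includes storage and data transfer, not just GPU time. This is a sketch only: the `Quote` structure and all rates are hypothetical and should be replaced with figures from a provider's current price list.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    name: str
    gpu_hourly: float        # USD per GPU-hour
    storage_gb_month: float  # USD per GB stored per month
    egress_per_gb: float     # USD per GB transferred out


def monthly_cost(q: Quote, gpu_hours: float, storage_gb: float,
                 egress_gb: float) -> float:
    """Total monthly bill: compute plus storage plus outbound data transfer."""
    return (q.gpu_hourly * gpu_hours
            + q.storage_gb_month * storage_gb
            + q.egress_per_gb * egress_gb)


# Hypothetical quotes for the same GPU under two purchasing models.
quotes = [
    Quote("on-demand", gpu_hourly=2.00, storage_gb_month=0.10, egress_per_gb=0.09),
    Quote("spot", gpu_hourly=0.80, storage_gb_month=0.10, egress_per_gb=0.09),
]
for q in quotes:
    total = monthly_cost(q, gpu_hours=500, storage_gb=1000, egress_gb=200)
    print(f"{q.name:>9}: ${total:,.2f}")
```

Note that the storage and transfer charges stay the same in both cases, so the cheaper the GPU rate, the larger their share of the total bill.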

5. Security, Reliability, and Compliance

For enterprise and production workloads, compliance cannot be compromised. Look for GPU cloud servers that provide:

- SOC 2 or ISO 27001 certified infrastructure.

- Uptime Service Level Agreements (SLAs) of 99.9% or higher.

- Data encryption both at rest and in transit.

- Multi-region redundancy and backup options.
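It helps to translate an uptime percentage into the downtime it actually permits. The short sketch below does that arithmetic for a 30-day month. The function name is illustrative, and real SLAs define their own measurement windows and exclusions.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Maximum downtime, in minutes, that still meets the SLA over the period."""
    total_minutes = days * 24 * 60
    return (1 - sla_percent / 100) * total_minutes


for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% uptime allows {allowed_downtime_minutes(sla):.1f} min of downtime per 30 days")
# 99.0% uptime allows 432.0 min of downtime per 30 days
# 99.9% uptime allows 43.2 min of downtime per 30 days
# 99.99% uptime allows 4.3 min of downtime per 30 days
```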

6. Monitoring and Observability

Real-time monitoring ensures efficient resource utilization. Providers now include interactive dashboards showing GPU usage, job logs, performance metrics, and temperature readings. Proactive alerting systems help detect anomalies before they impact production.
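For teams that want a similar check on their own instances, a basic version can be built on `nvidia-smi`. The sketch below parses output in the shape produced by `nvidia-smi --query-gpu=index,utilization.gpu,temperature.gpu --format=csv,noheader,nounits` and flags idle or hot GPUs. The sample text is made up for illustration, and the thresholds are arbitrary assumptions rather than vendor recommendations.

```python
# Made-up sample in the CSV shape described above: index, utilization %, temperature C.
SAMPLE = "0, 97, 71\n1, 3, 41\n2, 88, 86\n"


def parse(text: str) -> list[dict]:
    """Turn each CSV line into a dict of integer readings."""
    rows = []
    for line in text.strip().splitlines():
        index, util, temp = (int(field.strip()) for field in line.split(","))
        rows.append({"index": index, "util": util, "temp": temp})
    return rows


def alerts(rows: list[dict], idle_below: int = 10, hot_above: int = 85) -> list[str]:
    """Flag GPUs that are paid for but idle, or running hotter than the threshold."""
    messages = []
    for r in rows:
        if r["util"] < idle_below:
            messages.append(f"GPU {r['index']}: idle ({r['util']}% utilization)")
        if r["temp"] > hot_above:
            messages.append(f"GPU {r['index']}: hot ({r['temp']} C)")
    return messages


for message in alerts(parse(SAMPLE)):
    print(message)
# GPU 1: idle (3% utilization)
# GPU 2: hot (86 C)
```

In practice the sample string would be replaced by the live command output, for instance captured with `subprocess.run`.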

The New Economics of GPU Cloud Rental

The rapid expansion of AI workloads has driven significant innovation in GPU cloud rental pricing structures. Specialized providers such as RunPod, Northflank, Lambda Labs, and Hyperstack have introduced pay-as-you-go and fractional GPU rental systems that make high-performance computing accessible even to small teams.

These systems offer transparent, flexible billing models and enable users to:

- Pay only for exact usage time rather than reserved capacity.

- Access marketplace GPUs at reduced rates through real-time bidding.

- Combine compute nodes across regions for balanced performance and cost.

The democratisation of GPU cloud rentals has made it possible for startups, academic institutions, and independent researchers to compete with larger organisations by accessing the same high-end infrastructure at a fraction of the traditional cost.
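Choosing from a GPU marketplace usually comes down to filtering offers by hard requirements and then taking the cheapest. The sketch below shows that selection logic. The offer list, field names, and reliability scores are hypothetical and do not reflect any marketplace's actual API or listings.

```python
# Hypothetical marketplace listings.
OFFERS = [
    {"id": "a", "gpu": "RTX 4090", "vram_gb": 24, "usd_per_hour": 0.38, "reliability": 0.97},
    {"id": "b", "gpu": "A100", "vram_gb": 80, "usd_per_hour": 0.95, "reliability": 0.91},
    {"id": "c", "gpu": "A100", "vram_gb": 80, "usd_per_hour": 1.10, "reliability": 0.99},
    {"id": "d", "gpu": "H100", "vram_gb": 80, "usd_per_hour": 1.95, "reliability": 0.98},
]


def cheapest(offers, min_vram_gb: int, min_reliability: float):
    """Return the lowest-priced offer meeting both constraints, or None."""
    eligible = [o for o in offers
                if o["vram_gb"] >= min_vram_gb and o["reliability"] >= min_reliability]
    return min(eligible, key=lambda o: o["usd_per_hour"]) if eligible else None


best = cheapest(OFFERS, min_vram_gb=40, min_reliability=0.95)
print(best["id"], best["gpu"], best["usd_per_hour"])  # c A100 1.1
```

Here the cheapest A100 is skipped because its reliability score falls below the threshold, which is the kind of trade-off marketplace pricing forces buyers to weigh.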

Dataoorts: Bridging Compute and Data in the Cloud GPU Ecosystem

Even as GPU accessibility improves, the biggest challenge for ML teams often lies in data management. Training models efficiently requires seamless data movement between storage systems and compute clusters. This is where Dataoorts has emerged as a critical enabler in the cloud AI ecosystem.

Dataoorts acts as a unified data fabric that connects cloud storage, data pipelines, and GPU clusters into one integrated workflow. Its core features include:

- Automated data versioning and lineage tracking that ensures reproducibility and transparency.

- High-speed ingestion pipelines optimised for large datasets, including images, text, and streaming inputs.

- Integration with leading MLOps and GPU cloud providers such as RunPod, Lambda, and GCP.

- Granular access control mechanisms for secure collaboration within distributed teams.

By optimising data throughput and minimising transfer delays, Dataoorts ensures that GPU clusters remain continuously utilised rather than idle. This balance of compute and data flow enhances training efficiency, shortens model iteration cycles, and provides a reliable foundation for enterprise-scale AI.

The Leading GPU Cloud Providers in 2025

As artificial intelligence and deep learning applications evolve, the competition among GPU cloud providers has intensified. Each platform offers distinct advantages in pricing, scalability, and hardware configuration. For businesses and developers, choosing the right cloud GPU solution can determine both the speed of deployment and the overall cost of ownership.

Below is a detailed breakdown of the top GPU cloud providers that have established leadership positions in 2025, along with comparative insights on performance, pricing, and flexibility.

1. Amazon Web Services (AWS)

AWS remains a top GPU cloud provider due to its sheer scale and variety of GPU options. The EC2 P4d and P5 instances deliver high performance with NVIDIA A100 and H100 GPUs. AWS also supports large-scale model training with optimized networking through Elastic Fabric Adapter (EFA), which significantly reduces latency between nodes.

Key Features

- Offers A100 and H100 GPUs for deep learning and generative AI.

- Supports distributed training with low-latency EFA interconnects.

- Provides tight integration with SageMaker for managed machine learning workflows.

- Pay-as-you-go model ideal for both startups and enterprise AI labs.

Pricing Snapshot

| Instance Type | GPU Model | On-Demand Hourly Rate (USD) | Use Case |
|---|---|---|---|
| P4d (p4d.24xlarge, 8 GPUs) | NVIDIA A100 | ~$32.77/hr | Large-scale AI training |
| G6e | NVIDIA L40S | ~$2.70/hr | Inferencing and lightweight training |

AWS provides flexible GPU cloud rental options, but cost optimization often relies on commitment-based discounts such as Savings Plans or on interruptible Spot Instances.
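Hyperscaler prices are usually quoted per instance rather than per GPU, so they need normalizing before comparison with per-GPU rates elsewhere. The sketch below does this for the P4d figure above, on the understanding that the rate refers to the p4d.24xlarge size with eight A100 GPUs. The helper name is illustrative.

```python
def per_gpu_hour(instance_hourly: float, gpu_count: int) -> float:
    """Convert a per-instance hourly price into a per-GPU hourly price."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return instance_hourly / gpu_count


# ~$32.77/hr for an 8-GPU instance works out to roughly $4.10 per GPU-hour.
print(f"${per_gpu_hour(32.77, 8):.2f} per GPU-hour")  # $4.10 per GPU-hour
```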

2. Google Cloud Platform (GCP)

Google Cloud’s AI-focused infrastructure continues to set industry benchmarks with its A3 GPU instances powered by NVIDIA H100 Tensor Core GPUs. Its unified AI ecosystem integrates TensorFlow, Vertex AI, and TPU accelerators, allowing enterprises to run hybrid or multi-framework workloads seamlessly.

Key Features

- Scalable GPU configurations with A100 and H100 support.

- Integration with TensorFlow, JAX, and PyTorch for seamless deployment.

- Vertex AI simplifies MLOps through an end-to-end managed pipeline.

- Global network delivers high bandwidth for data-heavy applications.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Best For |
|---|---|---|---|
| A3 | NVIDIA H100 | ~$31.60/hr | Enterprise AI training |
| A2 | NVIDIA A100 | ~$27.20/hr | Deep learning and research workloads |

Google Cloud’s flexible billing makes it a strong choice for teams requiring hybrid workloads across both TPUs and GPUs.

3. Microsoft Azure

Microsoft Azure provides an enterprise-friendly environment for deploying AI models at scale. The ND H100 v5 and NC H100 v5 instances are among the most powerful GPU cloud servers in 2025. Azure’s close integration with Microsoft Fabric, Synapse, and OpenAI APIs makes it an ideal choice for businesses investing in generative AI.

Key Features

- Offers NVIDIA H100, A100, and MI300X GPUs for varied workloads.

- Supports large model fine-tuning through integrated MLOps pipelines.

- Secure and compliant cloud environment suitable for regulated industries.

- Flexible GPU cloud rental and subscription pricing models.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| ND H100 v5 | NVIDIA H100 | ~$33.20/hr | Advanced model training |
| NCv3 | NVIDIA V100 | ~$6.90/hr | Research and small batch training |

Azure stands out for its strong enterprise ecosystem and support for hybrid on-premise deployments.

4. Dataoorts

Dataoorts has emerged as an innovative GPU cloud provider, offering a balance between affordability, performance, and advanced automation. The platform focuses on empowering startups, AI researchers, and growing enterprises with simplified GPU cloud rental access. Its infrastructure supports multi-GPU scaling, GPU orchestration, on-demand compute and AI workload optimization, all under a user-friendly interface.

Why Dataoorts is Gaining Attention

- Provides transparent pricing and easy GPU cloud server rentals with instant setup.

- Offers a wide range of GPU options including A100, A40, and RTX 6000.

- Includes automated deployment tools for AI and deep learning pipelines.

- Focused on data-intensive use cases in AI, cloud computing, and simulation workloads.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| D-A100 | NVIDIA A100 | ~$1.196/hr | Training large-scale models |
| D-RTX | RTX 6000 | ~$2.94/hr | Rendering and medium-scale AI tasks |

Dataoorts differentiates itself by blending transparent GPU rental pricing with real-time monitoring and scaling support, allowing users to control costs efficiently without sacrificing performance.

5. Lambda Labs

Lambda Labs is a specialized GPU cloud provider built for deep learning researchers and AI startups. Its Lambda Cloud platform focuses on simplicity and cost-effectiveness, offering easy deployment of frameworks like PyTorch, TensorFlow, and JAX out of the box.

Key Features

- Optimized GPU cloud servers for AI workloads with NVIDIA A100 and H100 options.

- Pre-configured deep learning environments.

- Low-latency networking designed for distributed training.

- Supports on-demand and reserved GPU cloud rentals.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| Lambda A100 | NVIDIA A100 | ~$20.48/hr | Deep learning research |
| Lambda H100 | NVIDIA H100 | ~$27.00/hr | Generative AI workloads |

Lambda Labs provides a strong balance between affordability and research-focused configurability.

6. Vast.ai

Vast.ai is one of the most affordable GPU cloud providers available in 2025, offering a decentralized marketplace model that connects users directly with independent compute suppliers. This peer-to-peer approach allows considerable cost flexibility, giving developers, data scientists, and researchers access to high-performance GPUs at a fraction of the usual price. The platform focuses on transparency and efficiency, making GPU cloud rentals highly customizable for every project.

Key Features

• Peer-to-peer GPU cloud rental marketplace with dynamic pricing.

• Supports popular ML frameworks including PyTorch, TensorFlow, and JAX.

• Provides access to GPU cloud servers featuring NVIDIA RTX 4090, A100, H100, and AMD MI300X.

• Docker-based deployment for fast configuration and compatibility with existing workflows.

• Transparent benchmarking and uptime reporting for informed selection.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| Vast Basic | NVIDIA RTX 4090 | ~$0.38/hr | Small-scale ML experiments |
| Vast Premium | NVIDIA H100 | ~$1.95/hr | Large model training |

Vast.ai stands out in the cloud computing ecosystem for offering high-end GPUs at marketplace-driven prices, ideal for startups and academic researchers seeking economical scaling options.

7. CoreWeave

CoreWeave is an enterprise-grade GPU cloud provider specializing in large-scale AI workloads, inference APIs, and model deployment. It is widely recognized for its performance, uptime reliability, and extensive developer tooling. CoreWeave’s cloud GPU infrastructure is optimized for deep learning, rendering, and generative AI training, making it a trusted platform for advanced ML teams.

Key Features

• Provides access to high-performance GPU cloud servers with NVIDIA H100, A100, and L40S GPUs.

• Supports distributed model training with ultra-low latency networking and NVLink interconnects.

• Kubernetes-native orchestration allows effortless scaling across multi-GPU clusters.

• Enterprise-grade SLA, high availability, and security compliance for production deployments.

• Integrated data storage and API endpoints for efficient MLOps workflows.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| CW Standard | NVIDIA A100 | ~$2.10/hr | Research and development |
| CW Pro | NVIDIA H100 | ~$3.75/hr | Enterprise AI model training |

CoreWeave’s focus on performance, orchestration, and reliability makes it an ideal choice for businesses running critical AI applications that demand consistent uptime and power.

8. Hyperstack

Hyperstack is a next-generation GPU cloud provider offering high-speed, scalable infrastructure optimized for modern machine learning and deep learning workloads. It focuses on maximizing throughput for LLM and transformer-based training while maintaining cost efficiency.

Key Features

• NVLink-enabled cloud GPU servers featuring NVIDIA H100, A100, and RTX 6000 Ada GPUs.

• Global data center presence enabling low-latency access for distributed workloads.

• Auto-scaling support with per-minute billing and hibernation options.

• Integrated SSD and NVMe storage for high I/O data performance.

• Developer-friendly API and easy setup for rapid experimentation.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| Hyperstack A100 | NVIDIA A100 | ~$2.00/hr | Multi-node training |
| Hyperstack H100 | NVIDIA H100 | ~$2.60/hr | LLM and transformer fine-tuning |

Hyperstack is well-suited for teams that need powerful, flexible GPU cloud servers for deep learning pipelines and require fast provisioning with global scalability.

9. DigitalOcean (Paperspace Gradient)

DigitalOcean, through its Paperspace Gradient platform, combines simplicity and performance to offer a cloud GPU environment tailored for startups and small teams. It provides predictable pricing and one-click deployment, making it easy to build, train, and scale machine learning models without managing backend infrastructure.

Key Features

• Pre-configured Jupyter environments and integrated MLOps tools.

• Support for GPU cloud rentals with NVIDIA H100, L40S, and RTX 4000/6000 Ada.

• Kubernetes support for production deployment and automated scaling.

• Transparent billing and quick provisioning for rapid testing.

• Ideal for developers seeking a gentle learning curve and reliable GPU performance.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| Gradient RTX 6000 | NVIDIA RTX 6000 Ada | ~$0.75/hr | Model inference |
| Gradient H100 | NVIDIA H100 | ~$1.99/hr | Advanced model training |

DigitalOcean’s Paperspace Gradient balances ease of use with affordability, making it a strong entry point into cloud GPU computing for small businesses and developers.

10. Northflank

Northflank is a cloud GPU provider that merges compute infrastructure with full-stack application deployment. It focuses on supporting teams building AI-powered apps through CI/CD integration, Git-based workflows, and containerized GPU deployment.

Key Features

• Unified platform for GPU cloud servers and application logic.

• Git-based deployment with automatic CI/CD integration.

• Multi-cloud compatibility across AWS, Azure, GCP, and BYOC environments.

• Access to over 18 GPU types including NVIDIA H100, L40S, and AMD MI300X.

• Auto-scaling, secure environments, and workload observability.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| Northflank L40S | NVIDIA L40S | ~$1.25/hr | Application hosting with AI inference |
| Northflank H100 | NVIDIA H100 | ~$2.30/hr | Full-stack model deployment |

Northflank is best suited for AI development teams seeking seamless integration between code repositories, MLOps pipelines, and GPU cloud computing environments.

11. IBM Cloud

IBM Cloud has evolved into a powerful GPU cloud provider focused on enterprise-grade AI, data science, and hybrid cloud computing solutions. It combines advanced GPU cloud servers with IBM Watson AI services, enabling organizations to build, train, and deploy large-scale machine learning models securely across on-prem and multi-cloud environments.

Key Features

• Enterprise-ready GPU cloud servers featuring NVIDIA A100, H100, and L40S GPUs.

• Deep integration with IBM Watson Machine Learning, AI Ops, and Data Fabric.

• Compliance with SOC 2, GDPR, and ISO standards, ensuring secure AI deployment.

• Hybrid cloud capabilities that connect on-prem infrastructure with public GPU cloud resources.

• Advanced data analytics integration through IBM Cloud Pak for Data.

Pricing Snapshot

| Instance Type | GPU Model | Hourly Rate (USD) | Use Case |
|---|---|---|---|
| IBM VPC GPU | NVIDIA A100 | ~$2.90/hr | Machine learning model training |
| IBM Enterprise GPU | NVIDIA H100 | ~$3.95/hr | AI inference and data analytics |

IBM Cloud’s combination of enterprise security, hybrid flexibility, and AI integration makes it a reliable GPU cloud server solution for organizations working on regulated or large-scale AI projects that demand both compliance and high performance.

Comparative Overview: 2025 GPU Cloud Provider Comparison

Selecting the right GPU cloud provider depends on the complexity of your workload, expected runtime, and overall scale of deployment. GPU cloud computing in 2025 has matured to serve a diverse set of users, from small startups running short experiments to large enterprises training multi-billion parameter models.

| Provider | GPU Models | Pricing (Approx. USD per Hour) | Best For | Key Differentiator |
|---|---|---|---|---|
| RunPod | A100, H100, MI300X, RTX 4090 | $0.34–$1.99 | Developers and researchers | Fast provisioning and low-cost pods |
| Northflank | H100, MI300X, L40S, TPUs | $0.60–$3.50 | Full-stack ML applications | CI/CD integration with hybrid deployment |
| Google Cloud (GCP) | A100, H100, L4, TPU v4/v5e | $11.00 | Enterprise-scale AI and data workloads | Deep integration with Vertex AI and TensorFlow |
| Amazon Web Services (AWS) | H100, A100, T4, V100 | $0.90–$98 | Enterprises and research labs | Scalable SageMaker training clusters |
| Hyperstack | H100, A100, L40S | $0.80–$2.50 | Deep learning and generative AI | High-speed NVLink connectivity |
| DigitalOcean (Paperspace) | H100, L40S, RTX 6000 | $0.76–$1.99 | Startups and ML teams | One-click setup and predictable pricing |
| Lambda Labs | A100, H100 | $20.00–$27.00 | AI researchers and engineers | Pre-configured frameworks and fast deployment |
| Vast.ai | RTX 4090, A100, H100 | $0.30–$1.00 | Budget-conscious users | Peer-to-peer GPU marketplace for flexibility |
| CoreWeave | A100, H100, L40S | $1.50–$3.00 | Enterprise AI and inference workloads | Optimized Kubernetes orchestration |
| Microsoft Azure | A100, H100, MI300X | $6.98 | Corporate users and regulated industries | Enterprise-grade hybrid cloud integration |
| IBM Cloud | A100, H100, L40S | $2.90–$3.95 | Data analytics and AI research | Watson AI integration and business-grade security |

This comparison reveals that 2025 offers a balanced ecosystem of GPU cloud providers. Users can now choose between on-demand GPU cloud rental for short-term experimentation or dedicated GPU cloud servers for long-term, high-performance computing.
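To turn hourly rates into a budget, multiply by the GPU hours a job needs and rank the results. The sketch below does this with the approximate H100 rates quoted in the provider sections of this guide, assuming each is a per-GPU price. Those figures are snapshots and may be out of date, so check current pricing before relying on them.

```python
# Approximate H100 hourly rates (USD) as quoted earlier in this guide; assumed per GPU.
H100_RATES = {
    "Vast.ai": 1.95,
    "DigitalOcean (Paperspace)": 1.99,
    "Northflank": 2.30,
    "Hyperstack": 2.60,
    "CoreWeave": 3.75,
    "IBM Cloud": 3.95,
}


def rank_by_job_cost(rates: dict, gpus: int, hours: float) -> list:
    """Return (provider, total cost) pairs sorted from cheapest to most expensive."""
    gpu_hours = gpus * hours
    costs = ((name, rate * gpu_hours) for name, rate in rates.items())
    return sorted(costs, key=lambda pair: pair[1])


# Example job: 8 GPUs for 24 hours, i.e. 192 GPU hours.
for name, cost in rank_by_job_cost(H100_RATES, gpus=8, hours=24):
    print(f"{name:<26} ${cost:>8,.2f}")
```

Price is only one axis: interconnect, reliability, and compliance requirements can easily outweigh a difference of a dollar per GPU-hour.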

Machine Learning Use Cases Where GPU Cloud Servers Excel

Cloud GPU infrastructure has become indispensable for accelerating machine learning projects that demand massive compute throughput and parallel processing. Below are the most impactful use cases for GPU cloud servers across industries.

1. Training Large Language Models (LLMs)

GPU cloud servers provide the computational muscle to train and fine-tune large transformer models such as GPT, LLaMA, and Mistral. Cloud computing platforms with H100 and A100 GPUs can process massive token datasets with reduced latency and memory bottlenecks, enabling faster convergence and efficient model scaling.

2. Computer Vision and Image Classification

From medical imaging to autonomous vehicles, computer vision workloads benefit immensely from GPU acceleration. Tasks such as image segmentation, object detection, and video classification often run an order of magnitude faster on GPU cloud servers than on CPU-based systems, although the exact speedup depends on the model and batch size. Cloud GPU rental models also allow teams to scale visual recognition workloads on demand.

3. Generative AI and Content Synthesis

Generative AI has become a major workload on modern GPU clouds. Applications include image generation using diffusion models, video enhancement through GANs, and voice synthesis using advanced neural networks. GPU cloud computing enables creatives and developers to use state-of-the-art models like Stable Diffusion and Runway in real time without investing in expensive hardware.

4. Reinforcement Learning and Robotics

Cloud GPUs are widely used in reinforcement learning environments, especially for training robotic control policies and autonomous navigation systems. The ability to parallelize thousands of simulation environments across GPU nodes dramatically reduces training times and enables continuous model improvement.

5. Predictive Analytics and Forecasting

Organizations rely on cloud GPU providers to handle real-time prediction tasks such as sales forecasting, demand estimation, and anomaly detection. GPU-accelerated analytics platforms can process large datasets with complex models like XGBoost, CatBoost, and deep neural networks to deliver faster and more accurate business insights.

Emerging Trends in GPU Cloud Computing for 2025

The GPU cloud industry in 2025 continues to evolve rapidly, driven by advancements in hardware architecture, orchestration tools, and AI democratization.

- Hopper and Beyond: The NVIDIA H100 remains the benchmark for high-end training. The newer NVIDIA H200 and AMD’s MI300X, both with more on-package memory than the H100, promise improved efficiency and cost-performance balance, especially for training and inference at scale.

- Hybrid Cloud Adoption: Many enterprises are combining on-premise GPUs with cloud GPU rentals to balance cost, performance, and compliance. This approach enables flexible workload scheduling while reducing idle GPU time.

- Sustainability in Cloud Computing: Providers are now building data centers powered by renewable energy. Cloud computing companies like Dataoorts and Hyperstack are also optimizing cooling and energy allocation to minimize carbon footprints.

- AI as a Managed Service: The rise of fully managed AI stacks allows even non-technical teams to deploy pre-trained models. These include APIs for vision, language, and audio that are directly powered by GPU cloud servers.

Final Thoughts: Choosing the Best GPU Cloud Provider for 2025

The global shift toward cloud-based GPU computing is not merely a technological trend but a fundamental change in how AI research and innovation occur. Whether you are a data scientist, startup founder, or enterprise architect, the key is to align your choice of GPU cloud provider with your workload type, performance goals, and cost expectations.

Platforms like AWS and GCP remain ideal for enterprise-scale AI, while specialized GPU providers such as Lambda Labs and Hyperstack offer exceptional flexibility for research and development. Cost-efficient options like RunPod and Vast.ai make GPU cloud rental accessible to independent developers.

Finally, companies like Dataoorts stand out by blending affordability, scalability, and intelligent resource management. With an emphasis on customized GPU solutions and transparent pricing, Dataoorts has quickly emerged as a reliable option for organizations seeking a sustainable and performance-optimized cloud GPU platform.